Movie idea: the priest who spoke to Salieri in “Amadeus” is so moved by his story that he begins incorporating the appreciation of music into his faith and teachings.

Herbie Hancock’s “Chameleon” is a classic. No argument there. But my all-time HH fave has got to be “Hang Up Your Hang Ups.”

Everyone’s looking forward to Halloween, but I just want to get to that extra hour of sleep two days later.

To everyone in their 20s and 30s, and I cannot emphasize this enough: start investing in your retirement plan NOW if you haven’t already. Your 50-plus-year-old self will thank you.

I got news for you. At 50, every little inconvenience suddenly becomes a huge pain in the ass. And a job is really nothing more than a series of little inconveniences. Otherwise why would they pay you? “I’m getting too old for this shit” becomes your whole lifestyle.

So, I implore you: put that money to work now.

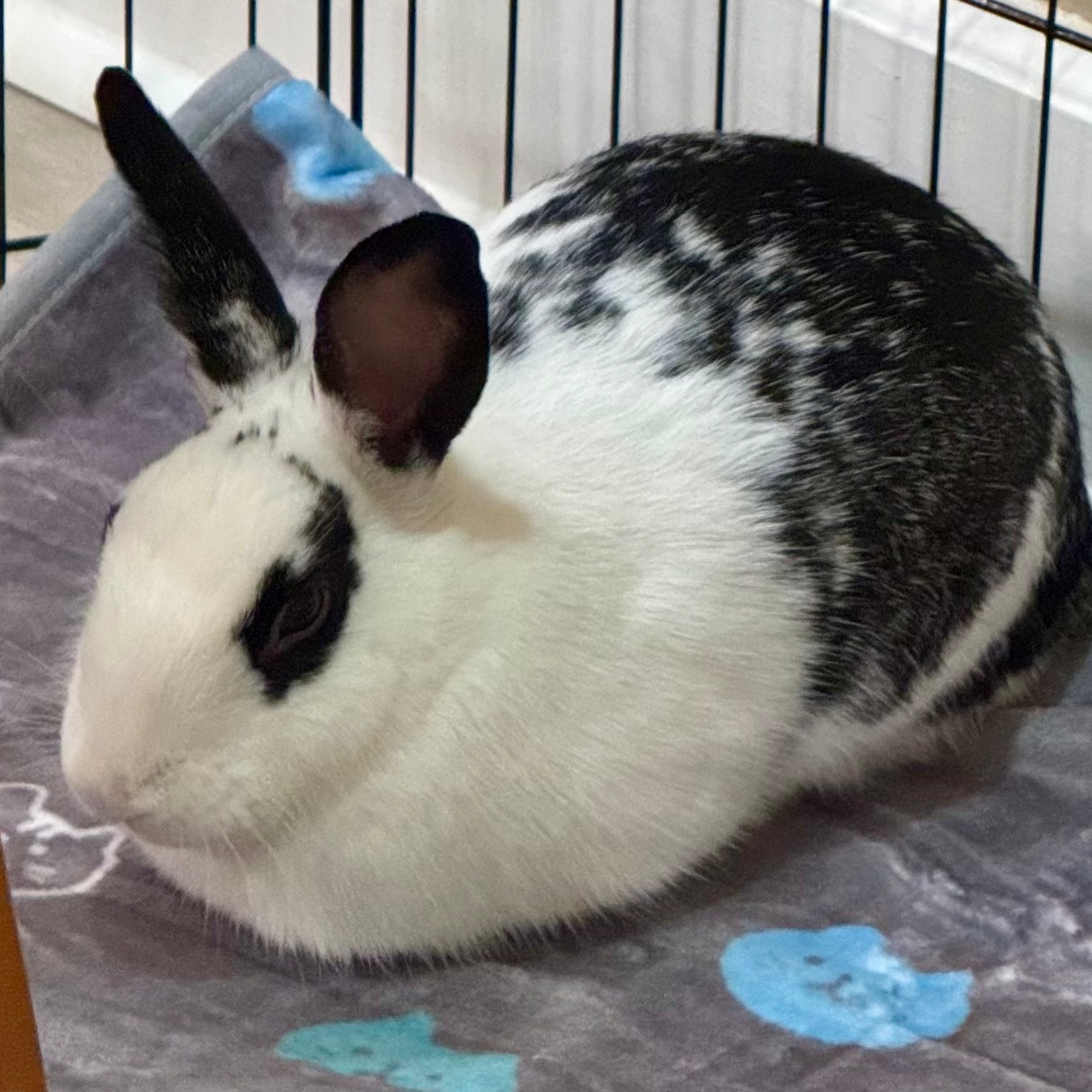

Allow me to share a few pics of our rabbit. This is our bunny Ollie. He’s a rescue. English spot mix.

We never even considered having any kind of pet (having kids is already overwhelming), but one of our daughters (19 yo) decided to get a bunny in the spring. His name was Panther. We had to say goodbye to Panther way too soon, and let me tell you, I never expected to experience grief like that over my daughter’s pet. But here we are. Ollie is helping us heal.

When I buy a new laptop, it’s always a MacBook Pro. I might never compile code on it or run sophisticated music or video editing software on it. Heck, I’ll probably only ever browse the web, check email, and pay bills with it.

So why waste money on such an overpowered machine? Simple: I’m afraid of it becoming obsolete too soon. I reckon overdoing it on the specs will get me an extra couple of years out of it. ¯(°_o)/¯

Apparently, thanks to the AWS outage, someone was able to rob the Louvre. They should not have chosen us-east-1.

You heard it here first.

Happy 4th of July, y’all! 🇺🇸